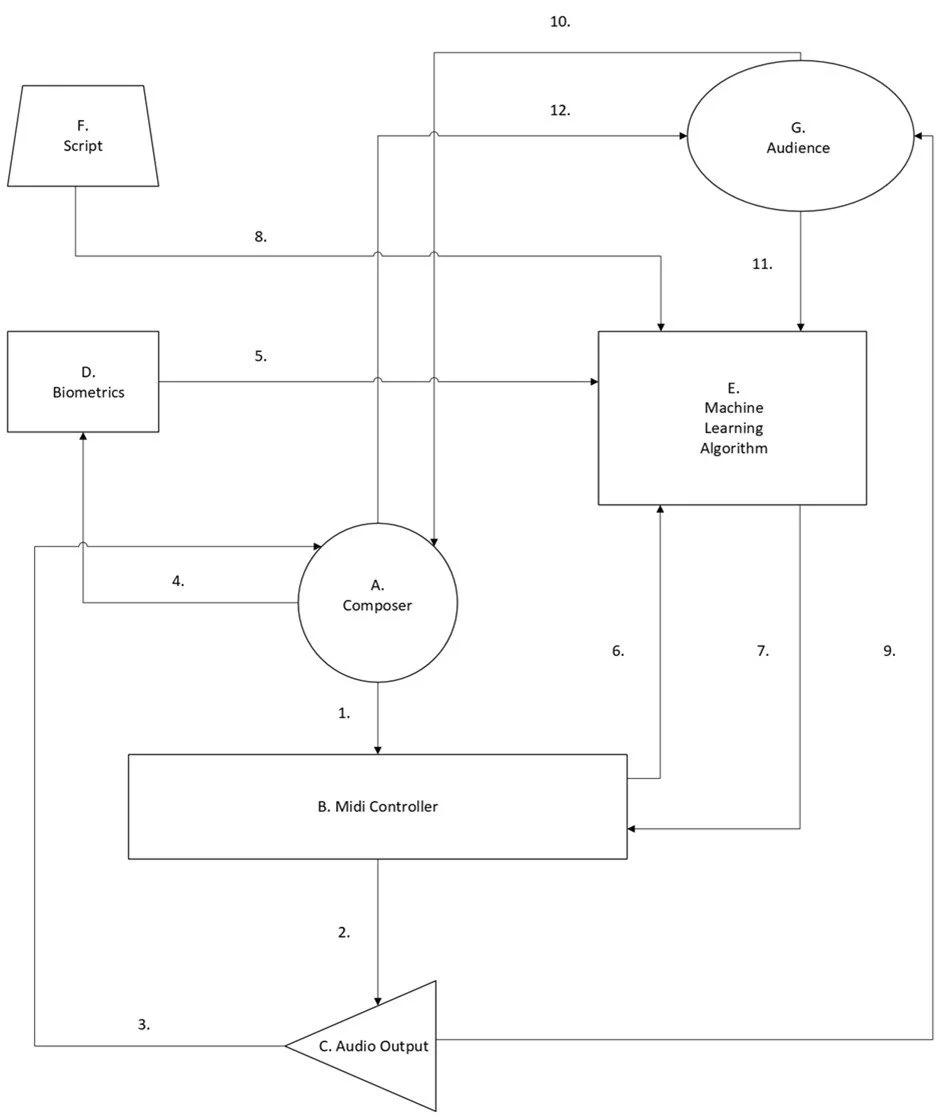

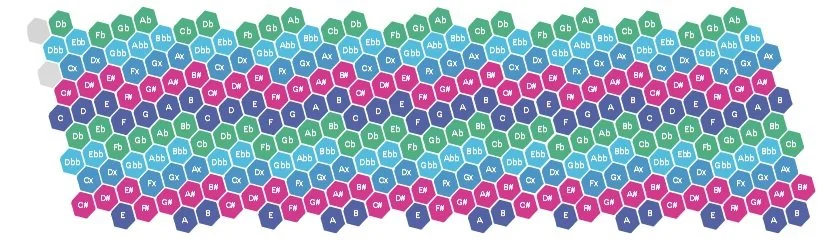

I am currently involved in a project to design and build an instrument that elicits the concept of ‘disrupted creativity’. This instrument is an artistic autoethnographic work exploring my ‘orphaning’, i.e. my removal from my mother at birth. Using facial emotion recognition and machine learning algorithms, a third party (in this case, an artificial intelligence) interferes with the composer’s creative process by hijacking control of the instrument from the composer. The third party then attempts to impose its emotional priorities on the composer and their art. It does this by swapping around the pitch mapping on a Lumatone that has been mapped to 31 Equal Divisions of the Octave (31EDO). The third party then evaluates the emotional state it is inducing through facial feature recognition. Based on pre-determined scores/scripts, it then changes the pitch mapping further. This disrupts the emotional dyad of the artist and their art, evoking the separation of mother and child. These modern elements are juxtaposed with those of early music, including the emotional affect associated with the tuning temperaments of the 16th to 18th centuries, and the pedagogical practices of the Neapolitan Conservatori, which taught orphans to become some of the most influential musicians of their era.
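To make the pitch-mapping idea concrete, here is a minimal sketch in Python. It is not the instrument's actual code. In 31EDO the octave is divided into 31 equal steps of 1200/31 ≈ 38.7 cents, so the frequency of step n above a reference pitch is the reference multiplied by 2^(n/31). The names `step_to_freq` and `swap_mapping`, the reference pitch of 261.63 Hz, and the use of random swaps are all assumptions made for illustration; in the project itself the swaps follow pre-determined scores/scripts.

```python
import random

EDO = 31                # equal divisions of the octave
BASE_FREQ_HZ = 261.63   # assumed reference pitch (roughly middle C)


def step_to_freq(step, base=BASE_FREQ_HZ, edo=EDO):
    """Frequency in Hz of a pitch `step` EDO-steps above the reference."""
    return base * 2 ** (step / edo)


def swap_mapping(mapping, n_swaps, rng):
    """Return a copy of `mapping` in which `n_swaps` randomly chosen
    pairs of keys have exchanged their pitches (hypothetical helper)."""
    disrupted = dict(mapping)
    keys = list(disrupted)
    for _ in range(n_swaps):
        a, b = rng.sample(keys, 2)
        disrupted[a], disrupted[b] = disrupted[b], disrupted[a]
    return disrupted


# Undisturbed mapping for one octave: key index -> 31EDO step.
mapping = {key: key for key in range(EDO)}

# The "third party" intervenes: some keys now sound unexpected pitches.
disrupted = swap_mapping(mapping, n_swaps=3, rng=random.Random(0))

for key in sorted(disrupted):
    if disrupted[key] != mapping[key]:
        print(f"key {key}: now sounds step {disrupted[key]} "
              f"({step_to_freq(disrupted[key]):.2f} Hz)")
```

A swap keeps every pitch of the tuning available but moves it to a different key, so the player's learned relationship between gesture and sound is what gets disturbed, not the tuning itself.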

Here are some images associated with this project:

Exploring Personal Trauma Through Disrupted Creativity:

Conceptual outline of the Instrument.

Schema of the Instrument.

31EDO Bosanquet mapping onto a Lumatone keyboard. Taken from: https://www.lumatone.io/support

Using FaceOSC to recognise my facial features (see: https://josephlyons.gitbook.io/face-control-digital-toolkit/tools/faceosc).